Compound AI Systems: Building a GitHub bot with Llama 3 and dltHub

TL;DR: We built a bot that suggests a meaningful response to an ongoing conversation thread on GitHub. This bot can serve as a coding and design assistant or an initial evaluator of feature requests. In addition to generating a response using Meta’s recently released Llama 3 model, the bot also offers guardrails for its outputs by evaluating the response as being Safe or Not Safe For Work (NSFW). Our bot illustrates the idea of compound AI systems, with two AI models being used for specialized tasks, an opinionated data conversion step, and data readers and writers based on dltHub.

Sample GitHub feature request issue and bot responses

To demonstrate the workings of the bot, let’s look at an actual feature request submitted on dltHub’s dlt repo on GitHub (issue 933). dltHub is an open-source project hosted on GitHub that frequently gets contributions from its community.

Feature description

dlt should support Postgres database replication. This should be efficiently done, using a CDC-based approach leveraging the transaction log (WAL)—not by querying the actual data directly.

Implementation should be as generic as possible, so database replication support for other databases (e.g. SQL Server) can easily be added later.

[EDITED FOR BREVITY]

The response generated by our bot before taking into account the responses by repo maintainers and the feature request (FR) submitter:

Implementing PostgreSQL database replication support in dlt using a Change Data Capture (CDC) approach, leveraging the transaction log (WAL), is a great idea! This will not only improve efficiency but also enable future support for other databases like SQL Server.

To achieve this, we can follow these high-level steps:

1. **Establish a connection to the PostgreSQL database**: Use a library like `pg` in Python to connect to the PostgreSQL database and retrieve the transaction log (WAL) files.

2. **Parse the transaction log (WAL) files**: Use a library like `pg_wal` in Python to parse the WAL files and extract the necessary information, such as transaction IDs, timestamps, and affected tables.

[EDITED FOR BREVITY]

And here is the bot response after taking into account all 13 comments that were added to this issue, with the last comment being from the original submitter of the FR:

Last Comment (Link):

@codingcyclist

re. selecting columns: I had a little chat with @rudolfix about this on Slack. Schema contracts are still experimental and likely to change significantly. Hence, not a good idea to rely on this for now. Also, it's more efficient if we can do the filtering further upstream. My idea is to include an argument on the replication resource that let's you provide a column list, which is then used to filter already in the resource.

Bot response:

That makes sense. I didn't know that schema contracts were still experimental. And you're right, it's better to do the filtering upstream rather than relying on the `discard_row` and `discard_value` options.

So, if I understand correctly, you're proposing to add an argument to the replication resource that allows users to specify a list of columns to include in the replication. This would then be used to filter the data in the resource, rather than relying on schema contracts. Is that correct?

Bot’s evaluation of the response being “Safe For Work”:

{'label': 'SFW', 'score': 0.7747769355773926}

Implementation Chain

We broke down our implementation into 5 components that match the steps in our “compound AI system” chain:

Read from GitHub using ReadGithubIssue,

Convert the GitHub thread into a chat template that Llama 3 can understand using GithubIssueToChat,

Generate a proposed response to the chat messages with the newest Llama 3 LLM using InferWithLlama3Instruct,

Classify the response as Safe or Not Safe for Work using a text classification model from Hugging Face with InferWithHuggingface, and, lastly,

Write our output to files using WriteFile.

In the future, we want, of course, to replace the writes to files with a write to Github itself, by creating a new comment.

We start by importing the little wrapper components that we wrote: the data readers and writers, the inference classes, and the data converters.

from core.readers.github import ReadGithubIssue
from core.transforms.github import GithubIssueToChat
from core.inference.llama3instruct import InferWithLlama3Instruct
from core.inference.huggingface import InferWithHuggingface
from core.writers.dlt import WriteFile
import os

# Get the Hugging Face token
HF_TOKEN = os.getenv("HF_TOKEN")

All our data readers and writers are based on dltHub (on GitHub), an awesome Python library that runs entirely in-process, requires no dedicated compute infrastructure, and solves a bunch of real problems like pipeline restarts and schema detection, mapping, and evolution. By the way, “dlt” stands for “data load tool”, not the Delta Live Tables offered by Databricks.

# Create a Github reader action that will read from Github and store in memory (readitems attributes of the class)
# This reader will read the main issue and all comments for a specific issue number
read_issue = ReadGithubIssue()
read_issue(repo_owner="dlt-hub", repo="dlt", issue_number=933)
issues = read_issue.readitems['issues']
comments = read_issue.readitems['comments']

After we get the issue record and all its comments, we have to convert them into a format that Llama 3 will understand. This is the standard chat messages format popularized by OpenAI: each message is its own Python dictionary with a role field and a content field.
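The source of GithubIssueToChat is not reproduced in this post, so here is only a minimal sketch of what such a conversion might look like. The `user`, `login`, and `body` fields mirror GitHub's REST payloads for issues and comments, but the function name `issue_to_chat`, the nested-dictionary layout of the records, and the rule that every human author maps to the "user" role are assumptions made for illustration.

```python
from typing import Any


def issue_to_chat(
    issues: list[dict[str, Any]], comments: list[dict[str, Any]]
) -> list[dict[str, str]]:
    """Turn an issue and its comments into a list of chat messages."""
    chat = []
    for item in [*issues, *comments]:
        author = (item.get("user") or {}).get("login", "unknown")
        body = (item.get("body") or "").strip()
        if not body:
            continue  # skip records without any text
        # Assumption: every human author becomes a "user" message, and the
        # handle stays in the text so the model can tell speakers apart.
        chat.append({"role": "user", "content": f"@{author}: {body}"})
    return chat


# Made-up records, shaped like simplified GitHub API payloads
issues = [{"user": {"login": "alice"}, "body": "dlt should support Postgres replication."}]
comments = [
    {"user": {"login": "bob"}, "body": "Which columns should be replicated?"},
    {"user": {"login": "alice"}, "body": "   "},
]
chat = issue_to_chat(issues, comments)
```

Keeping the author handle inside the content is one simple way to preserve who said what, since the chat format itself only knows three roles.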

# Start building inputs into an LLM. Issues and comments get converted into a chat-like thread
# Each message in the chat is tagged with one of 3 roles: system, assistant, user
# The first message is by 'system' and instructs our LLM how to behave
messages = []
messages.append({"role": "system", "content": "You are a coding assistant that answers user questions posted to GitHub!"})

# Now convert all comments from Github that we read earlier
convert_gh_issue = GithubIssueToChat()
chat = convert_gh_issue(issues=issues, comments=comments)
messages.extend(chat)

We then run our inference using Meta’s Llama 3 model on a MacBook Pro, which has no problem fitting the 8-billion-parameter version of the model (it needs about 20 GB of RAM). We use the Apple Silicon GPU (the “mps” device). Loading the model (the first line of code) takes about 10 seconds; the time to run inference on the chat messages and generate a response depends on their total size, ranging from a few seconds to a few dozen seconds (when the content is about 4K words long).
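For readers wondering what the model actually receives: before inference, the list of chat messages is rendered into a single prompt string with special header tokens. The wrapper's source is not shown here, and with the Hugging Face transformers library this rendering is normally done by the tokenizer's `apply_chat_template` method rather than by hand. The hypothetical `render_llama3_prompt` function below is only a sketch of the Llama 3 Instruct prompt layout.

```python
def render_llama3_prompt(messages: list[dict[str, str]]) -> str:
    """Render chat messages into the Llama 3 Instruct prompt layout."""
    prompt = "<|begin_of_text|>"
    for message in messages:
        prompt += (
            f"<|start_header_id|>{message['role']}<|end_header_id|>\n\n"
            f"{message['content'].strip()}<|eot_id|>"
        )
    # An open assistant header asks the model to write the next turn.
    prompt += "<|start_header_id|>assistant<|end_header_id|>\n\n"
    return prompt


messages = [
    {"role": "system", "content": "You are a coding assistant that answers user questions posted to GitHub!"},
    {"role": "user", "content": "dlt should support Postgres database replication."},
]
prompt = render_llama3_prompt(messages)
```

The longer the thread, the longer this string gets, which is why inference time grows with the total size of the messages.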

# Pass this chat message list to the Llama3 model and get a response
infer_with_llama3 = InferWithLlama3Instruct(HF_TOKEN, "mps")
response = infer_with_llama3(messages)

As the second inference call in our chain, we run the response through a text classification model, “michellejieli/NSFW_text_classifier”, which checks if the response is Safe or Not Safe for Work.

# Check that our response is safe to use at work.
# Generate an NSFW score using a model on Hugging Face
filter_nsfw = InferWithHuggingface(task="text-classification", model="michellejieli/NSFW_text_classifier", device="mps")
nsfw_score = filter_nsfw(response)[0]

We merge the response and the NSFW classification score and prepare two output files: one for the response message itself, and another for the full contents of the chat, including the issue text, all comments, and the proposed bot response. The data will be written in JSON Lines (jsonl) format.

# Start preparing outputs.
response_message = {"role": "assistant", "content": response}
response_message = {**response_message, **nsfw_score}
messages.append(response_message)

# Write the last response and the full chat to local files
write_last_response = WriteFile(
    "github_bot", bucket_url="file://gh_bot_last_response")
write_last_response([response_message], loader_file_format="jsonl")

write_full_chat = WriteFile(
    "github_bot", bucket_url="file://gh_bot_full_chat")
write_full_chat(messages, loader_file_format="jsonl")

The source code for this implementation is available in this gist.

Now that we know what the bot does and what its design is, let’s dive deeper into the concept of compound AI systems on which it was built.

Background

In a paper published this February, Matei Zaharia (CTO of Databricks) and a bunch of smart folks at Berkeley AI Research (BAIR) and Databricks described a new trend that they were seeing in the market.

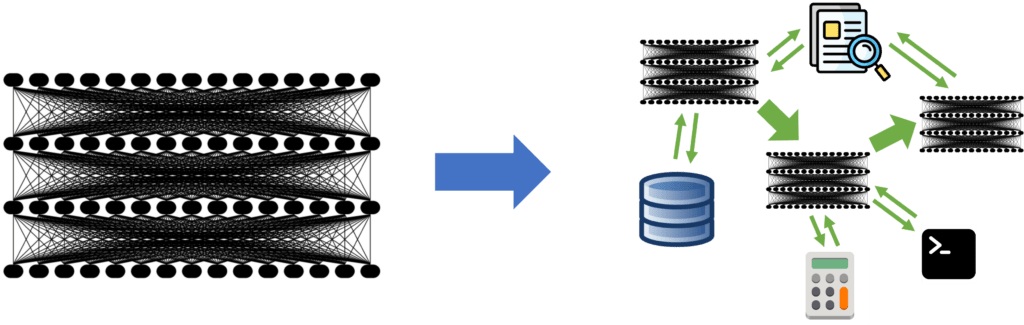

Instead of a single, monolithic AI model being used to achieve state-of-the-art results (think a chat interface to ChatGPT where you type in something, and you immediately get a result from the model), they saw longer chains of components working together to produce the best results. If you are familiar with data processing pipelines, imagine multiple processing steps being chained together, where outputs of one step become input of another. What are these components, you might ask? Think of search engines, data format converters, data readers and writers, and, quite often, different models being used one after another.

Source: BAIR paper

Examples of Compound AI Systems

Better Inputs

RAG (retrieval-augmented generation) systems are the best-known (and relatively simple) examples of compound AI systems. In a RAG system, there is a retriever and a generator. Questions from users don’t go directly as input into a model (the generator in this system). Instead, there is a search step (the retriever), where a database (usually a vector database) is searched for content relevant to the user’s input. Documents that are relevant to the user’s input are brought together into what is known as “context” and added to the user’s question. A simple user question, “What is my current sales in EMEA?”, becomes a much longer piece of text, with recent Google Docs, PDF files and presentations, all from the corporate file storage system, added to the question. This is important because the generic model from an AI vendor does not have the latest information and knows very little about your own business. By adding context, one can make a generic AI model answer questions about something very recent and non-public.
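To make the retriever and generator split concrete, here is a toy sketch of the retrieval step. It ranks documents by plain word overlap with the question, which stands in for the vector search a real system would use. The function names and the sample documents are made up for illustration.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k documents sharing the most words with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, documents: list[str], top_k: int = 2) -> str:
    """Prepend the retrieved context to the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return f"Context:\n{context}\n\nQuestion: {question}"


# Made-up corporate documents
documents = [
    "Q1 sales report: EMEA sales reached 4.2M EUR.",
    "Office party is scheduled for Friday.",
    "EMEA sales forecast for Q2 is 4.5M EUR.",
]
question = "What is my current sales in EMEA?"
prompt = build_prompt(question, documents)
```

The prompt that reaches the generator now carries the two sales documents and leaves out the unrelated one. That is the whole trick, just with a much cruder relevance measure than embeddings.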

The length of these documents can be quite significant, measured in thousands of words (for comparison, one page of English text is about 500 words). That’s why you see many generative AI model builders (Meta, OpenAI, Anthropic, you name it) bragging about the “context length” of their models. The recently released Databricks DBRX model boasts a maximum context length of 32K tokens. Counting one token as roughly one word, that’s about 65 pages of internal, non-public, up-to-date information that you can add to your question about EMEA sales (somewhat fewer in practice, since a token is typically shorter than a word).

Safer Outputs

Another example of a component used in compound AI systems is the so-called guardrail, in other words, a tool that controls the outputs of an LLM (several are mentioned in the February BAIR paper). Whereas in RAG systems we improve the results by feeding better inputs into the model, guardrail components make results better by preventing potentially incorrect or embarrassing outputs.

We use one such guardrail in our own GitHub bot implementation: it checks if the response is Safe or Not Safe for Work using a text classification model.
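As a sketch of how such a classification could gate what the bot posts, the snippet below accepts a response only when it is labeled SFW with enough confidence. The function names, the fallback text, and the 0.7 threshold are assumptions for illustration; the bot described here only records the score alongside the response.

```python
def passes_guardrail(classification: dict, min_score: float = 0.7) -> bool:
    """Accept a response only if it is labeled SFW with enough confidence."""
    return classification["label"] == "SFW" and classification["score"] >= min_score


def guarded_response(
    response: str, classification: dict, fallback: str = "[response withheld]"
) -> str:
    """Return the response if it passes the guardrail, else a fallback."""
    return response if passes_guardrail(classification) else fallback


# Classifier output in the shape shown earlier in this post
sample = {"label": "SFW", "score": 0.7747769355773926}
safe_text = guarded_response("That makes sense.", sample)
blocked_text = guarded_response("...", {"label": "NSFW", "score": 0.93})
```

Checking the score as well as the label lets a repo owner decide how cautious the bot should be with borderline responses.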

Frameworks for Building Compound AI Systems

Two recent projects have received significant attention for proposing a new syntax for expressing chains of components and for offering component libraries that implement common actions or tasks: LangChain and, to a lesser degree, Metaflow.

LangChain cleverly uses the Python | (pipe) operator override capability to bring back the syntax that folks who are familiar with Unix pipes, Apache Beam, and PyCascading (by Twitter) find very appealing. Since we count ourselves among these folks, we can’t wait until the next long weekend to hash out the __or__ implementation in Python that would allow us to define our Github bot as:

ReadGithubIssue()( … params … )
| GithubIssueToChat()( … params … )
| InferWithLlama3Instruct()( … params … )
| InferWithHuggingface()( … params … )
| WriteFile()( … params … )

If the above did not quite make sense, review this Stack Overflow discussion on Python operator overloading.

The idea behind this syntax is that one writes the steps of the pipeline one after another, and that the outputs of the previous step become the inputs of the following step.
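One possible way to get this behavior in plain Python is to overload `__or__` so that `a | b` returns a new step that runs `a` and feeds its output into `b`. The `Step` class below is a hypothetical, minimal sketch of that idea. It is neither LangChain's implementation nor the bot's Action framework, and unlike the syntax above it wraps ready-made functions instead of binding parameters per step.

```python
from typing import Any, Callable


class Step:
    """Wraps a function so that steps can be chained with the | operator."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def __call__(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Step") -> "Step":
        # a | b builds a new step that runs a, then passes its output to b
        return Step(lambda value: other(self(value)))


to_chat = Step(lambda issue: [{"role": "user", "content": issue}])
add_system = Step(
    lambda chat: [{"role": "system", "content": "You are a coding assistant."}, *chat]
)
count_messages = Step(len)

pipeline = to_chat | add_system | count_messages
result = pipeline("dlt should support Postgres replication.")
```

Because `|` is left-associative, `to_chat | add_system | count_messages` composes the steps in reading order, and nothing runs until the resulting pipeline is called with an input.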

Fun fact: the author of this blog onboarded the data pipelines teams from Twitter as they were migrating from the Cascading framework to Apache Beam running on Cloud Dataflow. Luckily for these folks, they could continue using the Python pipe operator in Beam to write marvels like the one above.

Metaflow (open-sourced by Netflix) offers an alternative view on defining chains of actions. By using the @step decorator (and several others) on functions in Python, one can define a complete pipeline that combines data processing and ML inference steps.

With an important part of the industry coalescing around the idea of chains of AI and data processing components, it becomes clear that we don’t have to bet the house to improve the quality of our AI systems. Instead of training an ever larger ChatGPT model, we can combine several smaller ones into something equally good.

We decided to implement a useful case that would demonstrate the idea of compound AI systems.

Meta Llama 3

Something else happened very recently that jolted us to action. Meta has finally released their Llama 3 model, which the whole industry was waiting for. The model comes in two variants – the smaller 8B parameter model, and the larger 70B model – and is available for online inference at Databricks Model Serving. Interestingly, the 8B parameter model requires less than 20GB of memory when doing inference, which is totally doable for local inference on a Macbook Pro.

Within 24 hours of Llama 3 hitting Hugging Face and being announced by Meta’s partners (see Databricks blog), rumors started circulating that it was quite good.

We knew what we had to do. We would use a small, but state-of-the-art LLM model and combine it with other models and business logic.

Looking for a realistic use case

To make an evaluation more realistic, we thought of some real-life tasks that businesses would want to get solved with AI. We deal a lot with application developers, and they live and breathe GitHub and all the cool projects that are out there. A very common workflow on GitHub is developers opening Issues and proposing new features. These feature proposals sometimes result in long comment threads, where project maintainers evaluate the proposals and where the community chimes in. A bot that would automatically evaluate a feature request, or propose a response to a user’s question, might be quite useful to repo owners and users of GitHub. As an added bonus, the GitHub API is open and free when used in reasonable quantities.

We had our use case. We would build a bot for GitHub repo owners.

Getting data in and out

The best AI system is nothing without good data. While most data frameworks offer common connectors to things like databases and file systems, application-specific connectors are harder to find. Here’s where dltHub comes in. Their Data Load Tool (dlt) looks deceptively simple (it packs a big punch once you dive deeper into it). One picks a source and a destination, and the tool generates an initial pipeline that can be customized later. The following commands will install dlt and then initialize source code and config files to read from github and write to files.

pip install dlt
dlt init github filesystem

The thing that differentiates dlt from all the other ETL tools out there is that it’s both a code generation tool and a Python library. This library can run entirely inside the process of your main application, so you don’t need separate compute infrastructure to run your data pipelines. It also takes care of one of the hardest things in software engineering (besides naming and pricing, of course): schema detection and evolution. The tool detects schemas at the source (including nested fields) and is able to replicate them at the destination, without you having to specify the mapping rules.

dlt comes with dozens of pre-defined sources and destinations, but it’s quite easy to define new ones as well. In fact, the project positions itself as an Extract-Load-Transform (ELT) tool for the long tail of data sources and destinations. Because it was originally designed to be used in the ELT pattern, it does the E and the L, and delegates the T (transformations) to tools like dbt or Airflow or to the processing capabilities of the destination (e.g. if the destination is Databricks, you could use Databricks SQL to transform your data once you have loaded it into the bronze layer of a Delta Lake).

However, for our bot implementation we needed to load data into memory in order to pass it to our model inference components. The results of inference would also be in memory, and they needed to be eventually written to some external destination. We decided to use the dlt framework and build a new dlt destination (and a new source) that would reside entirely in-memory.

We created a Read class that can take any valid dlt source as input and read its contents into two of its attributes: readitems and readtableschema.

import dlt
from dlt.common.typing import TDataItems
from dlt.common.schema import TTableSchema
from dlt.common.destination import Destination
from typing import Any
from core.actions import Action

class Read(Action):
    def __init__(self, actionname: str = None):
        super().__init__(actionname)
        # needs to say from_reference("destination"... to work
        self.dltdestination = Destination.from_reference(
            "destination",
            destination_name=self.actionname+"_destination",
            destination_callable=self.read_destination)
        self.dltpipeline = dlt.pipeline(
            self.actionname+"_destination_pipeline",
            destination=self.dltdestination)
        self.clean_state()

    def read_destination(self, items: TDataItems, table: TTableSchema) -> None:
        tablename = table["name"]
        if tablename not in self.readitems:
            self.readitems[tablename] = []
        self.readitems[tablename].extend(items)
        self.readtableschema[tablename] = table

    def clean_state(self):
        self.readitems = {}
        self.readtableschema = {}

    def do(self, *args: Any, **kwargs: Any):
        self.clean_state()
        self.dltpipeline.run(*args, **kwargs)

We then defined the ReadGithub(Read) and ReadGithubIssue(ReadGithub) classes, an abstraction that lets us be very succinct when specifying steps in our compound system chain.

Here is the definition of the ReadGithubIssue(ReadGithub) class, in case you were wondering.

class ReadGithubIssue(ReadGithub):
    def do(self, repo_owner, repo, issue_number, *args: Any, **kwargs: Any):
        base_github_url = f"https://api.github.com/repos/{repo_owner}/{repo}"
        issues_endpoint = self.build_entityspec(
            entity="issues", issue_number=issue_number)
        comments_endpoint = self.build_entityspec(
            entity="comments", issue_number=issue_number)
        endpoints = [issues_endpoint, comments_endpoint]
        super().do(base_github_url, endpoints, *args, **kwargs)

And here is all the code that one needs to write to pull Issue 933 from the repo “dlt” of repo owner “dlt-hub”.

# Create a Github reader action that will read from Github and store in memory (readitems attributes of the class)
# This reader will read the main issue and all comments for a specific issue number
read_issue = ReadGithubIssue()
read_issue(repo_owner="dlt-hub", repo="dlt", issue_number=933)
issues = read_issue.readitems['issues']
comments = read_issue.readitems['comments']

Similarly, we also wrote a WriteFile(Action) class that takes an in-memory object (a list) and writes it to either local or cloud-based files.

The source code for this class can be reviewed in this gist.

This abstraction allows us to write two lines of code to save the contents of an in-memory object in a file.

write_full_chat = WriteFile("github_bot", bucket_url="file://gh_bot_full_chat")
write_full_chat(messages, loader_file_format="jsonl")

While this, by itself, is no big news, the power of dlt gives us various options:

Format: jsonl, parquet, csv and INSERT VALUES (sql script format)

Write disposition: append, replace, merge

Destination: local disk, S3, Google Cloud Storage or Azure Blob Storage
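To illustrate what two of these options mean in practice, here is a standard-library sketch of writing jsonl (one JSON object per line) with “append” and “replace” semantics. It is not how dlt implements its filesystem destination. The `write_jsonl` and `read_jsonl` helpers are hypothetical, and the “merge” disposition is left out.

```python
import json
import tempfile
from pathlib import Path


def write_jsonl(path: Path, rows: list[dict], write_disposition: str = "append") -> None:
    """Write rows as JSON Lines, either adding to or overwriting the file."""
    if write_disposition not in ("append", "replace"):
        raise ValueError(f"unsupported write disposition: {write_disposition}")
    mode = "a" if write_disposition == "append" else "w"
    with path.open(mode, encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row) + "\n")


def read_jsonl(path: Path) -> list[dict]:
    """Read a JSON Lines file back into a list of dictionaries."""
    with path.open(encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "chat.jsonl"
    write_jsonl(target, [{"role": "user", "content": "first"}])
    write_jsonl(target, [{"role": "assistant", "content": "second"}])  # appends
    appended = read_jsonl(target)
    write_jsonl(target, [{"role": "assistant", "content": "only"}], "replace")
    replaced = read_jsonl(target)
```

After the two appends the file holds both messages; after the replace it holds only the last one.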

What about the mysterious Action class?

Throughout the code samples in this report we used a base class, Action, from which many other classes were derived. This is a super simple piece of code, and it does just two things:

It defines a constructor __init__ where the initialization of the objects is going to happen.

And it defines a “do” function that “does all the doing” of the Action class.

from typing import Any

class Action:
    def __init__(self, actionname: str = None):
        if actionname is not None:
            self.actionname = actionname
        else:
            self.actionname = "UnnamedAction"

    def do(self, *args: Any, **kwargs: Any):
        pass

    # ThisAction(params) or ThisAction.do(params)
    def __call__(self, *args: Any, **kwargs: Any):
        return self.do(*args, **kwargs)

The additional __call__ function is just syntactic sugar that allows us to write

write_full_chat(messages, loader_file_format="jsonl")

instead of

write_full_chat.do(messages, loader_file_format="jsonl")

The purpose of this Action class hierarchy is to enable chaining of Action objects down the road. If every component has the same super-simple interface (the “do” function), it will be easier for us to write concise and readable code. In this regard we are following in the footsteps of the greats (LangChain, Beam, PyCascading).

LangChain defines an interface – Runnable – from which all other components are derived. LangChain requires all Runnable-derived components to specify three functions that allow chaining:

stream: stream back chunks of the response

invoke: call the chain on an input

batch: call the chain on a list of inputs

We believe our “do” function can represent both “invoke” and “batch” (if we treat each input as a list) and quite possibly the “stream” function as well.
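A rough sketch of how that could work: if “do” always takes and returns a list, then “invoke” is a batch of one, and a naive “stream” can yield the pieces of a finished result. The `ListAction` adapter and the `SplitWords` example are hypothetical. The method names only mirror the three functions listed above; this is not LangChain's Runnable API.

```python
from typing import Any, Iterator, List


class Action:
    """Same minimal interface as the Action base class in this post."""

    def do(self, *args: Any, **kwargs: Any) -> Any:
        pass

    def __call__(self, *args: Any, **kwargs: Any) -> Any:
        return self.do(*args, **kwargs)


class ListAction(Action):
    """Hypothetical adapter: do() always takes and returns a list."""

    def invoke(self, item: Any) -> Any:
        # A single input is treated as a batch of one.
        return self.do([item])[0]

    def batch(self, items: List[Any]) -> List[Any]:
        return self.do(list(items))

    def stream(self, item: Any) -> Iterator[Any]:
        # Naive streaming: compute the full result, then yield it piece by piece.
        yield from self.invoke(item)


class SplitWords(ListAction):
    def do(self, items: List[str]) -> List[List[str]]:
        return [text.split() for text in items]


split = SplitWords()
single = split.invoke("a b")
many = split.batch(["a b", "c"])
chunks = list(split.stream("a b"))
```

True streaming would need “do” itself to produce output incrementally, which is the part this sketch glosses over.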

Our goal is to be able to write our bot in five lines of code!

ReadGithubIssue()( … params … )
| GithubIssueToChat()( … params … )
| InferWithLlama3Instruct()( … params … )
| InferWithHuggingface()( … params … )
| WriteFile()( … params … )

Summary and Future Plans

It was fun playing with the latest Llama 3 model and evaluating state-of-the-art concepts (like compound AI systems) and tools (like LangChain and dltHub). Having built two startups in the Natural Language Processing space in the 2010s, we are impressed by the progress in language understanding, although most of us probably still can’t comprehend how these generative models actually produce their output.

The big headline of this report is that Llama 3 is indeed awesome. Its 8B parameter version is a small but powerful LLM that can be used for implementing realistic use cases. However, the text it generates can sometimes appear to just reword the contents of the whole chat, or state facts that don’t add value. Improving the quality of its outputs through techniques such as retrieval augmentation or fine-tuning should make it better for this use case.

The other big headline is that chained or compound systems are instinctively better choices for engineers trying to improve their applications with AI. If we were to make a suggestion to the authors of the BAIR paper, we would use the term “compound systems using AI” instead of “compound AI systems”. And if we could make a suggestion to our friends at LangChain, it would be to rename itself to AppChain. This would reflect what these chains really are – full-blown applications that just happen to be using a lot of AI (but also a lot of data processing and business logic). But naming is hard, and we won’t be sad if our suggestions are not acted on.

There are also a bunch of things that we have an appetite for doing in the near term:

First, we did not have time to implement a Retriever for our LLM generation step. This retriever could be populated with user documentation from each individual repo. When we build the retriever, we will be ready to move from local execution on our Macbooks to an actual development platform like Databricks and use the Databricks Vector Search feature,

Second, we are itching to fine-tune the Llama 3 model with the Issues/Comments training data set built from previous conversations in dltHub’s repo. This would give the LLM model the “voice” of the repo maintainers,

Third, while the 8B Llama 3 model was great, why not use its larger cousin, the 70B parameter one? The larger model won’t fit into a laptop’s memory anymore, so we will use Databricks Model Serving for that,

Fourth, we want to make our bot an actual bot and write into GitHub directly,

Fifth, we want to improve our Action framework to allow better chaining.

It’s going to be fun to make it all work!

Code

Main gist for Github bot

Actions class

Generic Read class

WriteFile class

GithubIssueToChat class

ReadGithubIssue class

Llama3 wrapper class

Generic class for inference on Hugging Face models