Calculating per-job Cloud Dataflow costs - now possible with job labels

Ever wanted to track your resource usage and costs by specific Cloud Dataflow jobs? Cloud Dataflow recently started labeling billing records with job IDs. Here is how to calculate your job costs.

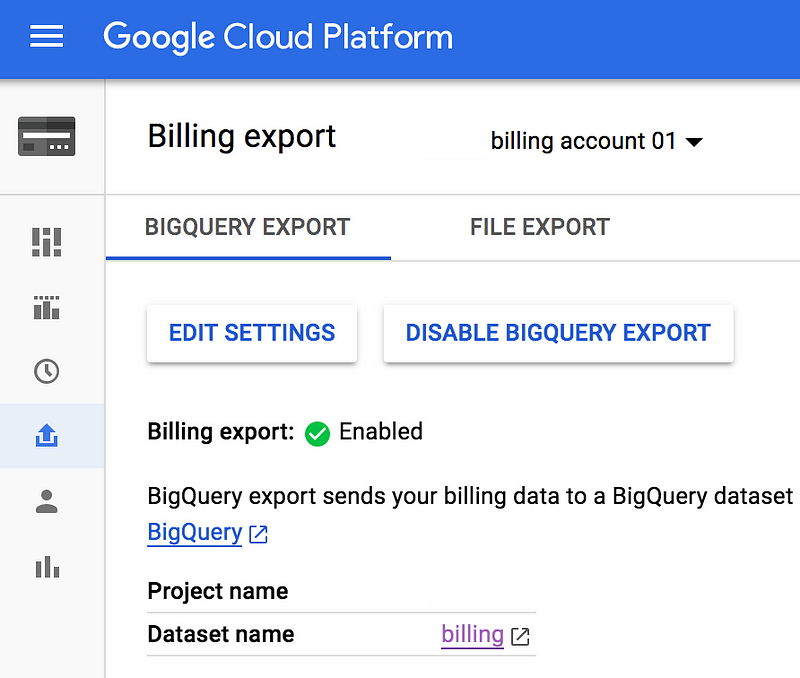

Enable billing export into BigQuery.

Go to Billing>Billing export in the Google Cloud Console.

Create a new BigQuery dataset, if needed, to store your billing data.

Give the billing export a little time to populate your dataset.

Then, in BigQuery, run this query:

#standardSQL

SELECT l.value AS JobID, ROUND(SUM(cost),3) AS JobCost

FROM `[PROJECT].[DATASET].gcp_billing_export_v1_[account_id]` bill, UNNEST(bill.labels) l

WHERE service.description = 'Cloud Dataflow' AND l.key = 'goog-dataflow-job-id'

GROUP BY 1

You can get more than $$s from Cloud Dataflow billing exports. Each consumed SKU unit is labeled and you can calculate how many CPU hours, Memory GB hours, and other units you consumed per job using pretty much the same approach.
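As a rough sketch of that per-unit breakdown, the query below groups by SKU as well as by job. It assumes your export table has the standard `sku.description`, `usage.amount`, and `usage.unit` columns; the output column names (`Sku`, `Unit`, `UsageAmount`, `SkuCost`) are just illustrative, and the bracketed placeholders are the same ones as in the cost query. Check `Unit` before reading the numbers: usage may be reported in base units such as seconds rather than hours, in which case you would need to convert.

```sql
#standardSQL
-- Sketch only: per-job, per-SKU usage and cost for Cloud Dataflow.
-- Assumes the standard billing export columns sku.description,
-- usage.amount and usage.unit are present in your table.
SELECT
  l.value AS JobID,
  sku.description AS Sku,
  usage.unit AS Unit,                          -- unit that UsageAmount is expressed in
  ROUND(SUM(usage.amount), 3) AS UsageAmount,  -- total consumed units for this SKU
  ROUND(SUM(cost), 3) AS SkuCost
FROM `[PROJECT].[DATASET].gcp_billing_export_v1_[account_id]` bill, UNNEST(bill.labels) l
WHERE service.description = 'Cloud Dataflow' AND l.key = 'goog-dataflow-job-id'
GROUP BY 1, 2, 3
ORDER BY 1, 2
```

Each result row is one SKU for one job, so summing `SkuCost` over a job's rows should give the same total as the per-job cost query.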

And the costs of my Dataflow jobs? They were in line with what I expected, but it was good to get reassured by real usage data.